Build a "Chat with PDF/Doc/Text Files" App Using Spring AI, PostgreSQL, and Docker

In this tutorial, we will build a RAG (Retrieval-Augmented Generation) application. It lets users add their own documents (PDFs, Word docs, text files) and ask the AI questions that are answered from those files.

We will use Google Gemini (which offers low-cost and free-tier models), PostgreSQL with pgvector (to store the document embeddings), and Spring AI.

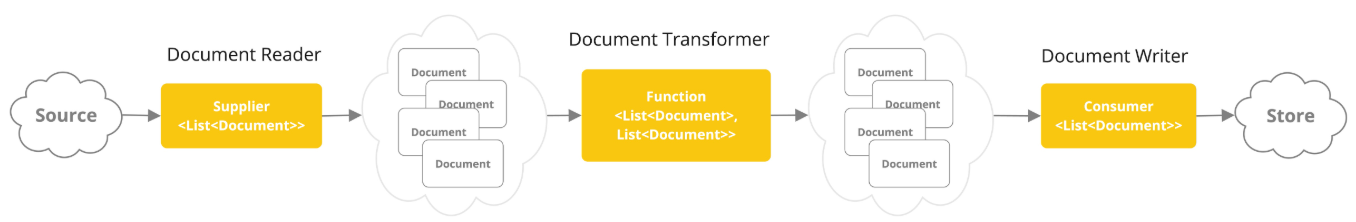

We will build a pipeline that:

- Extracts text from PDFs and Office documents using Apache Tika.

- Splits the text into chunks and converts each chunk into a vector (an embedding).

- Loads them into a PostgreSQL (pgvector) database.

- Retrieves the data when a user asks a question via a REST API.
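The retrieval step works by comparing vectors. As a toy illustration (plain Java, not Spring AI code), the sketch below ranks stored chunks by cosine similarity to a query vector. The three-dimensional vectors and sample texts are made up for the example; real embeddings come from the embedding model and are much longer, and in the real app the pgvector store runs the equivalent search inside PostgreSQL.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Toy illustration of the "retrieve" step: rank stored chunks by cosine
// similarity to the query embedding. The vectors here are invented.
class ToyRetriever {

    // A stored chunk: its text plus the embedding computed at ingestion time.
    record Chunk(String text, double[] embedding) {}

    // Cosine similarity: dot(a, b) / (|a| * |b|), ranges from -1 to 1.
    // (Returns NaN for an all-zero vector; fine for a toy.)
    static double cosineSimilarity(double[] a, double[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("Vectors must have the same dimension");
        }
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    // Return the texts of the k chunks most similar to the query vector.
    static List<String> topK(List<Chunk> store, double[] query, int k) {
        List<Chunk> sorted = new ArrayList<>(store);
        sorted.sort(Comparator.comparingDouble(
                (Chunk c) -> cosineSimilarity(c.embedding(), query)).reversed());
        return sorted.stream().limit(k).map(Chunk::text).toList();
    }

    public static void main(String[] args) {
        List<Chunk> store = List.of(
                new Chunk("Employees may work remotely two days a week.", new double[] {0.9, 0.1, 0.0}),
                new Chunk("The cafeteria opens at 8 am.", new double[] {0.0, 0.2, 0.9}),
                new Chunk("Remote work requires manager approval.", new double[] {0.8, 0.3, 0.1}));
        // Pretend this is the embedding of "What is the remote work policy?"
        double[] query = {1.0, 0.2, 0.0};
        System.out.println(topK(store, query, 2));
    }
}
```

The two remote-work chunks point in nearly the same direction as the query vector, so they rank above the cafeteria chunk; that ranking is what gets handed to the model as context.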

Tools and Technologies

- Java 21

- Spring Boot 3.4.x

- Spring AI 1.0.0+

- Database: PostgreSQL with the `pgvector` extension (via Docker)

- Docker: We use the `pgvector/pgvector` image (PostgreSQL with pgvector preinstalled).

- AI Model: Google Gemini Flash (Fast & Cost-effective)

- Document Reader: Apache Tika

Development Steps

- Step 1: Project Configuration (Gradle)

- Step 2: Database Setup (Docker)

- Step 3: Application Properties

- Step 4: The ETL Service (Ingestion Pipeline)

- Step 5: The RAG Controller (Chat with Data)

- Step 6: How to Test This?

1. Step 1: Project Configuration (Gradle)

We need to add the Tika reader (for file parsing) and PGVector (for the database).

1. File: build.gradle

```groovy
plugins {
    id 'java'
    id 'org.springframework.boot' version '3.4.1' // Use latest stable
    id 'io.spring.dependency-management' version '1.1.7'
}

group = 'com.planetlearning'
version = '0.0.1-SNAPSHOT'

java {
    toolchain {
        languageVersion = JavaLanguageVersion.of(21)
    }
}

repositories {
    mavenCentral()
    maven { url 'https://repo.spring.io/milestone' }
    maven { url 'https://repo.spring.io/snapshot' }
}

ext {
    // The spring-ai-starter-* artifact names below do not exist in 1.0.0-M5;
    // the Google GenAI starter needs Spring AI 1.1+. Check for the latest release.
    set('springAiVersion', "1.1.0")
}

dependencies {
    implementation 'org.springframework.boot:spring-boot-starter-web'
    implementation 'org.springframework.boot:spring-boot-starter-data-jpa'

    // AI & Vector Store
    implementation 'org.springframework.ai:spring-ai-starter-model-google-genai'
    // The pgvector store also needs an EmbeddingModel bean from an embedding starter
    implementation 'org.springframework.ai:spring-ai-starter-vector-store-pgvector'
    // QuestionAnswerAdvisor lives in its own module
    implementation 'org.springframework.ai:spring-ai-advisors-vector-store'

    // Tika Reader - Reads PDFs, DOCX, PPT, TXT automatically
    implementation 'org.springframework.ai:spring-ai-tika-document-reader'

    // Database
    runtimeOnly 'org.postgresql:postgresql'

    compileOnly 'org.projectlombok:lombok'
    annotationProcessor 'org.projectlombok:lombok'
    testImplementation 'org.springframework.boot:spring-boot-starter-test'
}

dependencyManagement {
    imports {
        mavenBom "org.springframework.ai:spring-ai-bom:${springAiVersion}"
    }
}
```

2. Step 2: Database Setup (Docker)

We need a PostgreSQL image that includes the pgvector extension, which adds a vector data type and similarity-search operators. We will run it with Docker Compose.
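For context, pgvector adds a `vector(n)` column type that stores a fixed-length array of floats, written in text form as `[0.1,0.2,0.3]`. A column declared with n dimensions rejects vectors of any other length, which is why the `dimensions` setting in Step 3 matters. Spring AI handles the conversion for you; the hypothetical helper below (not part of Spring AI or the pgvector driver) only illustrates the text format and the length check.

```java
import java.util.StringJoiner;

// Hypothetical helper: formats an embedding as a pgvector text literal,
// e.g. [0.1,0.2,0.3], after checking it matches the column's declared size.
class PgVectorLiteral {

    static String toLiteral(float[] embedding, int columnDimensions) {
        // A column declared as vector(768) rejects vectors of any other length,
        // so fail early with a clearer message.
        if (embedding.length != columnDimensions) {
            throw new IllegalArgumentException("Expected " + columnDimensions
                    + " dimensions but got " + embedding.length);
        }
        StringJoiner joiner = new StringJoiner(",", "[", "]");
        for (float value : embedding) {
            joiner.add(Float.toString(value));
        }
        return joiner.toString();
    }

    public static void main(String[] args) {
        System.out.println(toLiteral(new float[] {0.1f, 0.2f, 0.3f}, 3));
    }
}
```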

1. File: docker-compose.yml

Create these two files in the project root folder (the same folder as your build.gradle file):

- init.sql (an empty file; Docker runs it only when the database is first initialized)

- docker-compose.yml

```yaml
services:
  db:
    image: pgvector/pgvector:pg16
    container_name: planet-learning-db
    restart: unless-stopped
    ports:
      - "5432:5432"
    environment:
      - POSTGRES_DB=vectordb
      - POSTGRES_USER=postgres
      - POSTGRES_PASSWORD=password
    volumes:
      - postgres_data:/var/lib/postgresql/data
      - ./init.sql:/docker-entrypoint-initdb.d/init.sql
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -U postgres"]
      interval: 10s
      timeout: 5s
      retries: 5

volumes:
  postgres_data:
```

Run this command to start the DB:

```bash
docker-compose up -d
```

3. Step 3: Application Properties

Configure your Google API key and database connection. Because `initialize-schema` is set to `true`, Spring AI creates the vector table for you on startup.

1. File: src/main/resources/application.properties

```properties
spring.application.name=SpringAiRag

# --- Database Config ---
spring.datasource.url=jdbc:postgresql://localhost:5432/vectordb
spring.datasource.username=postgres
spring.datasource.password=password
spring.datasource.driver-class-name=org.postgresql.Driver

# --- AI Config ---
spring.ai.google.genai.api-key=YOUR_GOOGLE_GEMINI_KEY_HERE
# Any currently available Gemini Flash model (the 1.5 models have been retired)
spring.ai.google.genai.chat.options.model=gemini-2.5-flash

# --- Vector Store Config ---
# This tells Spring to create the table structure automatically
spring.ai.vectorstore.pgvector.initialize-schema=true
# Must equal the size of the vectors your embedding model returns (Important!)
spring.ai.vectorstore.pgvector.dimensions=768
```

4. Step 4: The ETL Service (Ingestion Pipeline)

This service reads your files (PDFs, text files, etc.), splits them into small pieces (chunks), embeds each chunk, and saves the chunks with their embeddings to the database.
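Why split at all? Embedding models accept a limited amount of input, and small, focused chunks tend to match a question more precisely than a whole document. The simplified sketch below splits by word count purely as an illustration; Spring AI's `TokenTextSplitter` counts model tokens with a tokenizer rather than words and applies its own rules about where to cut. The class name `WordChunker` is hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Simplified stand-in for a text splitter: cuts text into chunks of at most
// maxWords words. Words only approximate tokens.
class WordChunker {

    static List<String> chunk(String text, int maxWords) {
        if (maxWords <= 0) {
            throw new IllegalArgumentException("maxWords must be positive");
        }
        String trimmed = text.strip();
        List<String> chunks = new ArrayList<>();
        if (trimmed.isEmpty()) {
            return chunks; // nothing to embed
        }
        // Split on any run of whitespace (spaces, tabs, newlines).
        String[] words = trimmed.split("\\s+");
        for (int start = 0; start < words.length; start += maxWords) {
            int end = Math.min(start + maxWords, words.length);
            chunks.add(String.join(" ", Arrays.copyOfRange(words, start, end)));
        }
        return chunks;
    }

    public static void main(String[] args) {
        String text = "Employees may work remotely two days a week. Requests go to the manager.";
        chunk(text, 5).forEach(System.out::println);
    }
}
```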

1. File: src/main/java/com/planetlearning/rag/service/EtlService.java

```java
package com.planetlearning.rag.service;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.ai.document.Document;
import org.springframework.ai.reader.tika.TikaDocumentReader;
import org.springframework.ai.transformer.splitter.TokenTextSplitter;
import org.springframework.ai.vectorstore.VectorStore;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.core.io.Resource;
import org.springframework.stereotype.Service;

import java.util.List;

@Service
public class EtlService {

    private static final Logger logger = LoggerFactory.getLogger(EtlService.class);

    private final VectorStore vectorStore;

    // Load files from the "resources" folder (src/main/resources/docs)
    @Value("classpath:docs/company_policy.pdf")
    private Resource pdfResource;

    @Value("classpath:docs/notes.txt")
    private Resource txtResource;

    public EtlService(VectorStore vectorStore) {
        this.vectorStore = vectorStore;
    }

    // Called from the /ingest endpoint (you could also run it on startup).
    // Each call adds every chunk again, so repeated calls create duplicates.
    public void ingestFiles() {
        logger.info("--- Starting ETL Pipeline ---");

        List<Resource> resources = List.of(pdfResource, txtResource);

        // 1. Transformer: Split long text into small AI-readable chunks
        TokenTextSplitter splitter = new TokenTextSplitter();

        for (Resource resource : resources) {
            if (!resource.exists()) {
                logger.warn("File not found: {}", resource.getFilename());
                continue;
            }

            logger.info("Processing: {}", resource.getFilename());

            // 2. Extract: Use Tika to read ANY file type (PDF, DOCX, TXT)
            TikaDocumentReader reader = new TikaDocumentReader(resource);
            List<Document> rawDocs = reader.get();

            // 3. Transform: Split docs into chunks
            List<Document> splitDocs = splitter.apply(rawDocs);

            // 4. Load: Embed the chunks and store them in Postgres
            vectorStore.add(splitDocs);

            logger.info("Saved {} chunks for file: {}", splitDocs.size(), resource.getFilename());
        }

        logger.info("--- ETL Pipeline Completed ---");
    }
}
```

5. Step 5: The RAG Controller (Chat with Data)

This is where the magic happens. We use `QuestionAnswerAdvisor`, which, for each question, searches the vector store for the most similar chunks and adds them to the prompt before it is sent to Google Gemini.
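Conceptually, the advisor turns one question into an augmented prompt: it embeds the question, runs a similarity search against the vector store, and pastes the matching chunks into the message sent to the model. The sketch below shows only the final string-assembly step, assuming the chunks have already been retrieved. The template wording and the `PromptAugmenter` name are invented for illustration; they are not the advisor's actual template.

```java
import java.util.List;

// Illustrative only: mimics how retrieved chunks are stitched into the prompt.
// The template wording here is invented, not QuestionAnswerAdvisor's own.
class PromptAugmenter {

    static String augment(String question, List<String> retrievedChunks) {
        // Join the retrieved chunks into one context section.
        String context = String.join("\n---\n", retrievedChunks);
        return question
                + "\n\nContext information is below.\n"
                + context
                + "\n\nAnswer using only the context above. "
                + "If the answer is not in the context, say \"I don't know\".";
    }

    public static void main(String[] args) {
        List<String> chunks = List.of(
                "Employees may work remotely two days a week.",
                "Remote work requires manager approval.");
        System.out.println(augment("What is the policy on remote work?", chunks));
    }
}
```

Because the model only sees what ends up in this prompt, the quality of the answers depends heavily on which chunks the similarity search returns.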

1. File: src/main/java/com/planetlearning/rag/controller/RagController.java

```java
package com.planetlearning.rag.controller;

import com.planetlearning.rag.service.EtlService;
import org.springframework.ai.chat.client.ChatClient;
import org.springframework.ai.chat.client.advisor.vectorstore.QuestionAnswerAdvisor;
import org.springframework.ai.vectorstore.VectorStore;
import org.springframework.web.bind.annotation.*;

@RestController
@RequestMapping("/api/v1/rag")
public class RagController {

    private final ChatClient chatClient;
    private final EtlService etlService;

    // We inject VectorStore to pass it to the Advisor
    public RagController(ChatClient.Builder builder, VectorStore vectorStore, EtlService etlService) {
        this.etlService = etlService;

        // Build the ChatClient with the RAG Advisor
        this.chatClient = builder
                .defaultSystem("You are a smart assistant. You must answer questions ONLY based on the provided documents. If the answer is not in the documents, say 'I don't know'.")
                .defaultAdvisors(QuestionAnswerAdvisor.builder(vectorStore).build()) // <--- The Magic Line
                .build();
    }

    // Endpoint to trigger data loading
    @PostMapping("/ingest")
    public String ingestData() {
        etlService.ingestFiles();
        return "Documents parsed and stored in database!";
    }

    // Endpoint to chat
    @GetMapping("/chat")
    public String chat(@RequestParam("query") String query) {
        return chatClient.prompt()
                .user(query)
                .call()
                .content();
    }
}
```

6. Step 6: How to Test This?


- Prepare Files: Create a folder `src/main/resources/docs` and put a PDF inside (e.g., `company_policy.pdf`). The service also looks for `notes.txt`; any missing file is skipped with a warning.

- Start DB: Run `docker-compose up -d`.

- Run App: Start the Spring Boot application.

- Ingest Data:

  - Open Postman.

  - Send `POST http://localhost:8080/api/v1/rag/ingest`

  - Result: "Documents parsed and stored..." (check your logs to see the chunk counts).

- Ask a Question:

  - Send `GET http://localhost:8080/api/v1/rag/chat?query=What is the policy on remote work?`

  - Result: The AI answers based on the PDF you ingested.
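If you prefer code to Postman, the sketch below calls both endpoints with the JDK's built-in `HttpClient`. It assumes the app is running on `localhost:8080` as in the steps above; the class name `RagClient` is hypothetical. Note that the question must be URL-encoded before it goes into the query string.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Calls the two endpoints from this tutorial with the JDK HTTP client.
// Assumes the app is running on localhost:8080 (the Spring Boot default port).
class RagClient {

    static final String BASE = "http://localhost:8080/api/v1/rag";

    // URLEncoder uses form encoding (spaces become '+', '?' becomes %3F),
    // which Spring decodes back to the original text for request parameters.
    static URI chatUri(String base, String question) {
        return URI.create(base + "/chat?query="
                + URLEncoder.encode(question, StandardCharsets.UTF_8));
    }

    public static void main(String[] args) throws Exception {
        HttpClient client = HttpClient.newHttpClient();

        // 1. Trigger ingestion (POST with an empty body).
        HttpRequest ingest = HttpRequest.newBuilder(URI.create(BASE + "/ingest"))
                .POST(HttpRequest.BodyPublishers.noBody())
                .build();
        System.out.println(client.send(ingest, HttpResponse.BodyHandlers.ofString()).body());

        // 2. Ask a question about the ingested documents.
        HttpRequest chat = HttpRequest.newBuilder(chatUri(BASE, "What is the policy on remote work?"))
                .GET()
                .build();
        System.out.println(client.send(chat, HttpResponse.BodyHandlers.ofString()).body());
    }
}
```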